Ensuring reliable and trusted experiences for communication and collaboration in the hybrid work era

Summary:

As the workplace moves to a hybrid model, organizations are tasked with supporting the communication and collaboration needs of employees across a diverse range of workstyles. As Microsoft Teams became a critical tool in allowing people to do their jobs or attend school remotely, it became more important than ever to ensure availability and reliability of the service.

This has motivated us to invest heavily in enhancements to the Teams service to ensure its reliability.

Several factors play into the quality and reliability of a service that’s used globally by millions of people every day. Variability in network bandwidth and connection speeds, a wide variety of devices being used to access the service, as well as new and changing user expectations all play a role. As we look at this challenge, we incorporate core Microsoft cloud principles into everything we build and apply proven patterns to our designs.

Building on our previous blog on our efforts to enhance the performance of Teams, we want to share additional insights into key investments we’re making to also bolster the reliability of the service. We define reliability as a failure-free experience where the service is available all the time.

Designing for resiliency

We start by assuming failures may occur in any aspect of the service or user experience during normal operations, including faults in sub-components such as a virtual machine or an API. Based on that assumption, we have built in automatic detection and mitigations to self-heal and recover with little or no impact on users. We have put a lot of effort into defining resilience patterns and have found that producing shared libraries and generic implementations is more impactful than just documenting best practices. As the Teams product has matured and usage patterns have changed, different strategies have been required.

To harden the Teams client, key resiliency patterns include retry with jittered backoff, caching strategies, circuit breakers, fallback patterns, bulkhead isolation, and rate limiting. To harden our service, key resiliency patterns include moving to an active-active architecture, removing single points of failure, and investing in observability.
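To make the first client pattern concrete, here is a minimal sketch of retry with jittered backoff. It is an illustration under stated assumptions, not the Teams client code: the function name `retry_with_jitter`, its parameters, and the default delays are all hypothetical, and it uses the common "full jitter" variant, where each wait is a random fraction of a capped exponential backoff.

```python
import random
import time


def retry_with_jitter(operation, max_attempts=5, base_delay=0.1, max_delay=5.0,
                      sleep=time.sleep, rng=random.random):
    """Call operation() until it succeeds or max_attempts is reached.

    Hypothetical helper: each wait is a random value between 0 and the
    capped exponential backoff ("full jitter"), so that many clients
    recovering from the same fault do not retry in lockstep.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            backoff = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng() * backoff)
```

The `sleep` and `rng` parameters exist only so the behavior can be tested without real waiting. In practice a retry like this would usually be limited to errors known to be transient and combined with a circuit breaker so that a failing dependency is not flooded.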

We are working to drive all aspects of the infrastructure to an active-active architecture. Prior to COVID, Teams maintained considerable dark capacity (unused capacity primarily there for build rollback) that became increasingly expensive as our scale expanded and our release quality improved. Active-active is when there are two or more operationally independent heterogeneous paths or, in some cases, multiple instances of a single-stack (homogeneous) path. Each path not only serves significant live traffic at steady state but also has the capability to handle traffic from a location that is moved out of rotation (we refer to this as the disaster recovery buffer) while leveraging client/protocol path-selection for seamless failover. A key advantage of the active-active design is that it provides load balancing and, in the event of a failure, automatically routes traffic to a healthy location.
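The role of the disaster recovery buffer can be illustrated with a small calculation. The sketch below is a simplified model, not a description of how Teams routes traffic: the function `redistribute`, the location names, and the load and capacity units are hypothetical. It takes one location out of rotation and spreads its traffic across the remaining locations in proportion to their spare capacity.

```python
def redistribute(load, capacity, failed):
    """Return the per-location load after `failed` is moved out of rotation.

    Hypothetical model: the failed location's traffic is shared among the
    survivors in proportion to their spare capacity (the disaster recovery
    buffer). Raises RuntimeError if the survivors cannot absorb it.
    """
    survivors = [name for name in load if name != failed]
    headroom = {name: capacity[name] - load[name] for name in survivors}
    total_headroom = sum(headroom.values())
    if load[failed] > total_headroom:
        raise RuntimeError("insufficient disaster recovery buffer")
    if total_headroom == 0:
        # Nothing to move and nowhere to put it: loads are unchanged.
        return {name: load[name] for name in survivors}
    return {
        name: load[name] + load[failed] * headroom[name] / total_headroom
        for name in survivors
    }


# Three active locations, each serving 60 units with capacity for 100.
steady_state = {"loc-a": 60, "loc-b": 60, "loc-c": 60}
limits = {"loc-a": 100, "loc-b": 100, "loc-c": 100}
after_failover = redistribute(steady_state, limits, failed="loc-a")
```

In this example each surviving location ends up at 90 of 100 units, which shows why every active path has to keep headroom at steady state rather than run close to its limit.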

No single point of failure

A single point of failure is any part of the system where a failure will stop core scenarios in Teams from operating. We strive to identify and remove all single points of failure and prioritize this work based on potential impact to the service. Example areas of investment have been to ensure we have active-active redundancy for Domain Name System (DNS), Content Delivery Network (CDN), network ingress, traffic management, and authentication/authorization resiliency. These investments can then be tested via fault injection to ensure the user experience is not impacted in the event of failure during normal operations.

Observability

We focus on availability and performance metrics, as measured from the user’s device, to best represent the user experience. Observability from the client perspective is ideal for detecting reliability issues at any scope, from problems experienced by a specific tenant to degradation seen in a country to regional and global health issues. Additional examples of metrics monitored across all locations include service health, API (application programming interface) responses, API dependencies, error logging, network/routing errors, and bandwidth. Leveraging these metrics, we try to decrease the time it takes to detect, diagnose, communicate, and fix customer-impacting events. Alerts are triggered if degradation is seen in any of the metrics, and this is backed up with 24×7 support.
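As a rough illustration of alerting on client-measured availability, the sketch below groups success and failure reports by segment (for example a tenant or a country) and flags any segment that falls below a target. Everything in it is an assumption made for the example: the function name `availability_alerts`, the event shape, the 99.9% target, and the minimum sample count are hypothetical and do not describe the actual Teams monitoring pipeline.

```python
from collections import defaultdict


def availability_alerts(events, target=0.999, min_samples=100):
    """Return {segment: availability} for segments below `target`.

    `events` is an iterable of (segment, succeeded) pairs reported by
    clients. Segments with fewer than `min_samples` reports are skipped
    so that a handful of failures does not trigger a noisy alert.
    """
    totals = defaultdict(int)
    successes = defaultdict(int)
    for segment, succeeded in events:
        totals[segment] += 1
        if succeeded:
            successes[segment] += 1

    alerts = {}
    for segment, total in totals.items():
        if total < min_samples:
            continue
        availability = successes[segment] / total
        if availability < target:
            alerts[segment] = availability
    return alerts
```

A production system would typically evaluate this over sliding time windows and compare against a baseline rather than a fixed threshold, but the grouping by segment is what lets a problem in one tenant or country stand out from healthy global numbers.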

Granular fault isolation to reduce impact of outage

Operations must continue with zero downtime in the event of a failure or disaster. Redundancy across the Azure global footprint and management of the blast radius to reduce the impact of any outage are key parts of this strategy. Two key areas of investment here have been to utilize partitioning for blast radius reduction and to achieve fault tolerance through replication and redundancy.

Partitioning for blast radius reduction

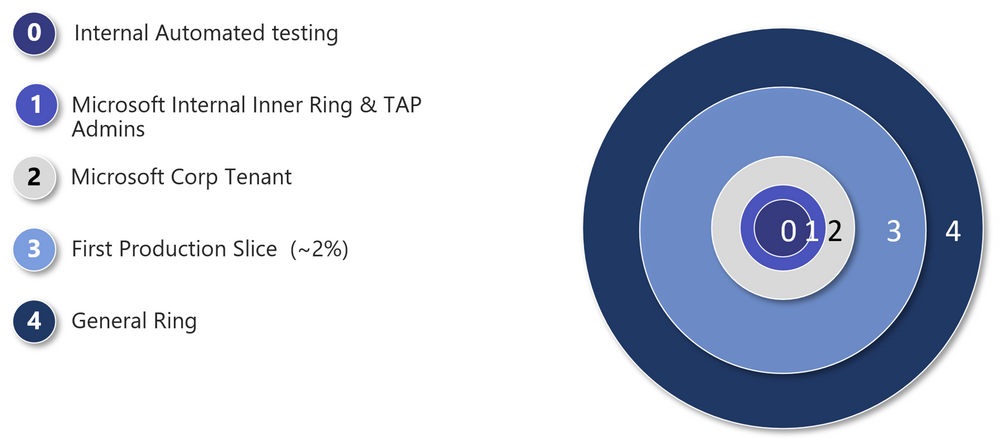

In the event of a fault, the goal is to minimize the number of people affected and limit exposure to a contained partition. To do this, we push both logical and physical mechanism changes through a set of deployment rings. The basic idea is that when we deploy a change, whether configuration or code, we deploy and validate it gradually with a small set of users and then expand to a higher ring once metrics meet their targets, feedback has been gathered, and gates have been passed. Deployment to sovereign clouds follows deployment to the public cloud. The benefit of this approach is that the team gets early feedback, and it limits the number of impacted users if a system failure is introduced.

Some key best practices applied to the rings are:

- Slow rollout across rings is required

- Capabilities pass through rings based on quality criteria

- Automated gates are leveraged to verify functionality, performance, and health

- Chaos/GameDay fault injections can be applied to a ring

- Teams are required to use percentage-based rollouts in the General ring

- Use of A/B testing to verify behavior and impact on core metrics
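The ring progression described above can be sketched as a simple loop. This is a minimal illustration, not the actual deployment system: the function `roll_out`, the ring names other than the General ring, and the shape of the `deploy` and gate callables are hypothetical.

```python
# Hypothetical ring names; only the General ring is named in this post.
RINGS = ["ring0", "ring1", "ring2", "general"]


def roll_out(change, rings, deploy, gates):
    """Deploy `change` ring by ring and stop at the first failed gate.

    `deploy(change, ring)` performs the deployment, and each gate is a
    callable `gate(change, ring)` returning True when the ring is healthy.
    Returns (completed_rings, halted_at); halted_at is None on full success.
    """
    completed = []
    for ring in rings:
        deploy(change, ring)
        if not all(gate(change, ring) for gate in gates):
            return completed, ring  # halt: later rings never see the change
        completed.append(ring)
    return completed, None
```

Because the loop returns as soon as a gate fails, a faulty change reaches only the rings deployed so far, which is the blast radius reduction the rings are meant to provide. Rollback of the halted ring is left out of the sketch.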

Fault tolerance through replication and redundancy

Data redundancy across data center regions is leveraged to achieve fault tolerance. In the three major geographies of the Americas; Europe, Middle East, and Africa (EMEA); and Asia Pacific (APAC), the data typically is replicated across three to four data centers. For countries where we offer data residency in country, the data is replicated across a minimum of two availability zones and, in most cases, across at least two data centers. Any failure in an individual location can be mitigated by directing traffic to one of the other locations without disruption to the users.

Safe change management

For a modern service, change is continuous as we bring improvements to our users. As we make changes, we must have system capacity, processes, and automation in place to reduce the associated risk. Two key areas here are an automated deployment pipeline across gated rings worldwide and the Security Development Lifecycle.

Automated deployment pipeline across gated rings worldwide

As changes are applied, they are pushed through an automated pipeline that features validation across a set of early rings before they are released to all users. This occurs via a percentage-based rollout. The validation leverages tests, which act as gates, assessing important criteria such as scenario completion, performance, security, privacy, accessibility, and availability.

Changes are also validated via A/B experiments that compare metrics against the existing experience to ensure that the change does not degrade core metrics or the user experience. A degradation seen in a test automatically halts the change from progressing to the next ring. To provide flexibility and greater control, Teams heavily utilizes feature flags to decouple deployment from exposure of new features. If an issue occurs due to a change, the feature flag can easily be reverted via a configuration change that can be applied in minutes. Clients are then auto-updated to ensure the best user experience is provided, all security and privacy fixes are applied, and the test matrix is reduced so that we can confidently ensure high quality.
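One common way to combine feature flags with a percentage-based rollout is to hash each user into a stable bucket. The sketch below shows that general technique; it is not the Teams flighting system, and the names `bucket`, `is_enabled`, and the flag `new-call-ui` are hypothetical.

```python
import hashlib


def bucket(user_id, flag_name):
    """Map a user to a stable bucket in the range 0..99 for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(flag_config, flag_name, user_id):
    """Return True if the flag is exposed to this user.

    `flag_config` maps a flag name to a rollout percentage (0 to 100).
    Unknown flags default to 0, so shipped code stays dark until the
    configuration turns it on.
    """
    percentage = flag_config.get(flag_name, 0)
    return bucket(user_id, flag_name) < percentage


# Expose the hypothetical flag to roughly a quarter of users.
config = {"new-call-ui": 25}
exposed = is_enabled(config, "new-call-ui", "user-123")
```

Because the bucket depends only on the flag name and user ID, raising the percentage keeps every already-exposed user exposed, and setting it back to 0 in configuration turns the feature off for everyone without a new deployment.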

Security Development Lifecycle

The Microsoft Security Development Lifecycle (SDL) has been a critical practice applied to Microsoft Teams from day one. The SDL introduces security, privacy, and compliance considerations through all phases of the development process. Threat models are created; data classification is conducted; data-handling policies are applied; and tools, processes, security, and privacy reviews are conducted. We also leverage third-party penetration testers and third-party auditors to validate compliance. Additionally, we offer a bug bounty program in which researchers around the world help us harden the software and experience.

Microsoft commitment to your Teams experience

Microsoft Teams works together with the broader Microsoft 365 organization to learn from each other, standardize best practices, and evolve our collective services toward ever greater reliability. We foster a culture that keeps reliability as a top-of-mind priority from the early design phases to the run state. We need our users to trust that our services are there for them and that they will always be available. This is a wide area for investment by Microsoft, and we will continue to push the boundaries for what is possible.

We would be happy to hear more from you on this topic so that we can learn and continue to improve. We also intend to publish additional blogs on key topics called out in this overview blog. Please share feedback and upvote your performance request in our feedback portal.

Date: 2022-03-21 15:00:00Z

Link: https://techcommunity.microsoft.com/t5/microsoft-teams-blog/ensuring-reliable-and-trusted-experiences-for-communication-and/ba-p/3261438